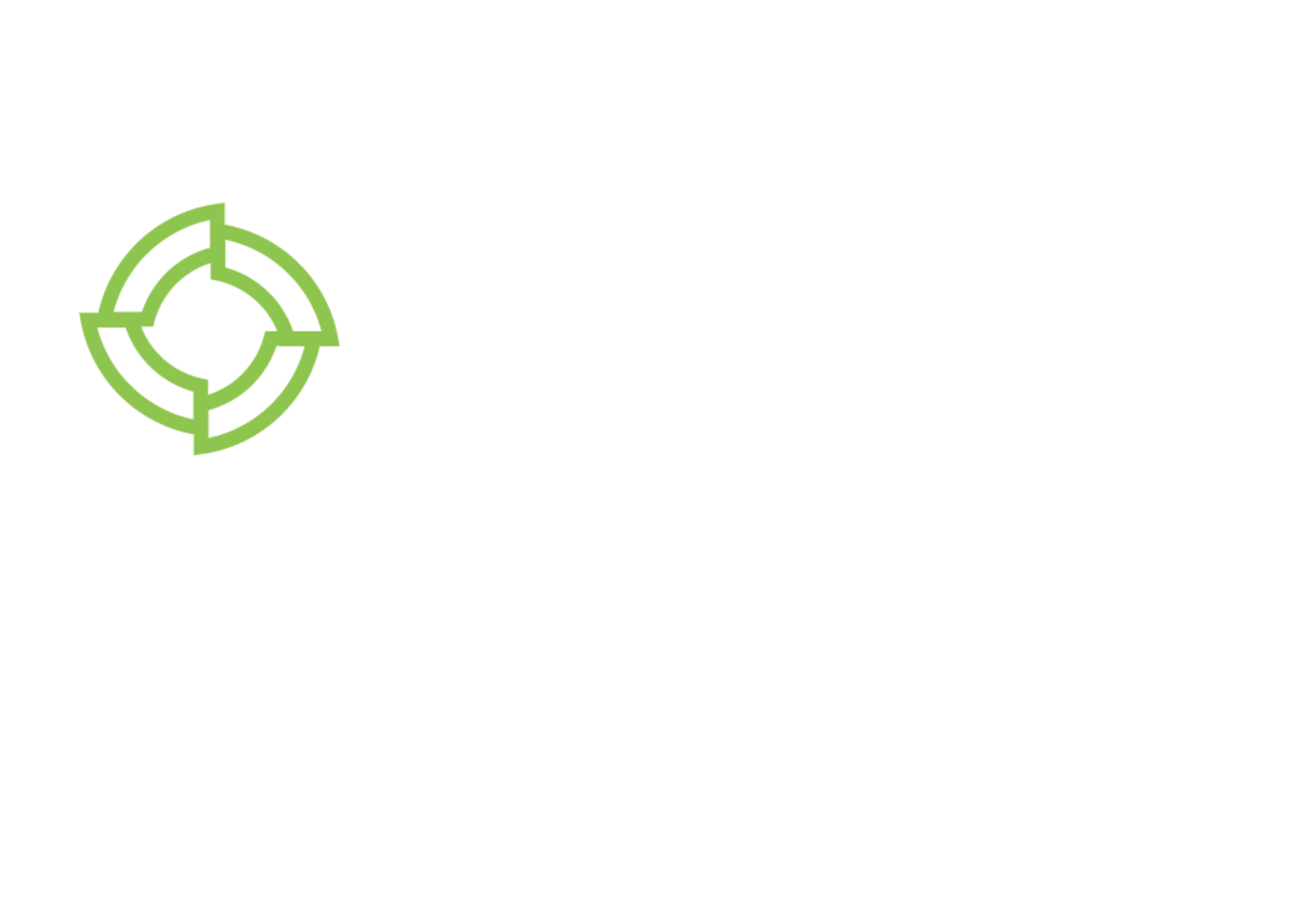

MLOps, short for Machine Learning Operations, is a framework for managing the life cycle of machine learning (ML) and artificial intelligence (AI) solutions. It encompasses practices, tools, and methodologies aimed at making ML/AI development and deployment more efficient, reliable, and scalable. At its core, MLOps fosters collaboration between data scientists, machine learning engineers, and operations teams, enabling them to work cohesively throughout the entire model life cycle.

In this blog, we will explore how, by embracing MLOps principles, organizations can significantly accelerate the pace of model development and production. Integrating security and privacy considerations into MLOps practices ensures that organizations meet the highest standards of quality and performance.

The Role of MLOps in Innovation

Central to the MLOps paradigm is the integration of continuous integration and continuous deployment (CI/CD) practices into ML/AI workflows. Through CI/CD pipelines, organizations can automate key aspects of the model development process, including training, testing, and deployment, thus reducing manual overhead and minimizing the risk of errors. Moreover, MLOps emphasizes robust monitoring, validation, and governance mechanisms to safeguard the integrity and reliability of ML models in production environments. By implementing comprehensive monitoring solutions, organizations can continuously track the performance and behavior of deployed models, identifying and addressing issues as they arise. Additionally, rigorous validation processes ensure that models meet predefined performance criteria and regulatory requirements before being deployed into production.
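To make the idea of validating a model against predefined performance criteria more concrete, here is a minimal sketch of a validation gate that a CI/CD pipeline could run before promoting a model. The metric names, threshold values, and the `validation_gate` function are all hypothetical and chosen for illustration; real criteria would depend on the model and any applicable regulatory requirements.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    """A minimum acceptable value for one evaluation metric."""
    metric: str
    minimum: float


def validation_gate(metrics: dict[str, float], thresholds: list[Threshold]) -> list[str]:
    """Return human-readable failures; an empty list means the model may be promoted."""
    failures = []
    for t in thresholds:
        value = metrics.get(t.metric)
        if value is None:
            # A missing metric is treated as a failure rather than silently skipped.
            failures.append(f"{t.metric}: missing from evaluation report")
        elif value < t.minimum:
            failures.append(f"{t.metric}: {value:.3f} is below the required {t.minimum:.3f}")
    return failures


# Hypothetical criteria and evaluation results, for illustration only.
THRESHOLDS = [Threshold("accuracy", 0.90), Threshold("recall", 0.80)]
candidate_metrics = {"accuracy": 0.93, "recall": 0.76}

failures = validation_gate(candidate_metrics, THRESHOLDS)
if failures:
    print("Deployment blocked:")
    for line in failures:
        print(" -", line)
else:
    print("All checks passed; model can be deployed.")
```

In a pipeline, a non-empty failure list would typically cause the deployment step to stop, so that a model falling short of the agreed criteria never reaches production without a human decision.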

In essence, MLOps represents a paradigm shift in the way organizations approach ML/AI development and deployment. By promoting collaboration, automation, and accountability, MLOps enables organizations to unlock the full potential of their ML/AI initiatives, driving innovation and delivering tangible business value. According to Deloitte, organizations that strongly adhere to MLOps processes are three times more likely to achieve their goals and deliver AI in a trustworthy way.

If your workplace is leaning into MLOps to make effective use of machine learning (ML), you are in good company. In a recent survey by ClearML of U.S.-based ML decision makers, 85% of respondents had a dedicated budget for MLOps in 2022. That number is growing: an additional 14% of respondents expected to have funding for MLOps in 2023, and the rise of generative AI will further spur the growth of MLOps. While MLOps is becoming mainstream, it comes with risks that are often the flip side of its benefits.

The Risks of MLOps

The explosive growth of ML (the global ML market is predicted to grow almost 40% annually, topping $200 billion by 2030) and generative AI brings value to companies – but also creates new challenges for developers and security teams. As MLOps matures, companies must grapple with the novel risks that ML introduces.

ML is innovative, which means it introduces new data structures and development processes. The complexity of ML, including long supply chains for foundational models and open-source ML components, compounds these challenges.

Companies often benefit from using ML with sensitive data. For example, a healthcare provider might have personally identifiable information (PII) about patients that could contribute to future medical research. A financial institution might have information about customers that could help to identify financial fraud. An ML system that uses sensitive data, including training data, requires extensive protection, given that the data can often be recovered by attackers in a variety of ways.

MLOps pipelines are vulnerable to attacks by insiders, software supply chain manipulation, and compromised systems. The U.K.-based Institute for Ethical AI & Machine Learning has created The MLSecOps Top 10, which aims to describe the most important vulnerabilities in the ML life cycle. Some of these (with our descriptions) are:

- Insecure ML systems/pipeline design

The complexity of the ML pipeline, from gathering and processing data to testing and deployment, can increase the attack surface, especially if it is not properly secured.

- Data & ML infrastructure misconfigurations

Misconfigurations, such as failing to enable confidential computing by default in a data collaboration scenario, can profoundly affect the security of an organization’s infrastructure.

- Supply chain vulnerability in ML code

A supply chain attack can occur if, for example, untrusted third-party code allows an attacker to modify or replace a machine learning library or model that is used by the system.

The list also includes unrestricted model endpoints, access to model artifacts, CI/CD integrity failures, and more.
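One common defense against a replaced library or model file is to pin a cryptographic hash of the artifact when it is published and verify it before loading. The sketch below illustrates that idea with Python's standard library only. The function names and the temporary `model.bin` file are hypothetical, and the approach assumes the pinned hash itself is stored somewhere the attacker cannot modify.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large model artifacts need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, expected_sha256: str) -> bool:
    """Return True only if the file's SHA-256 digest matches the pinned value."""
    actual = sha256_of_file(path)
    return hmac.compare_digest(actual, expected_sha256.lower())


# Demonstration with a throwaway file standing in for a model artifact.
with tempfile.TemporaryDirectory() as tmp:
    model_path = Path(tmp) / "model.bin"
    model_path.write_bytes(b"pretend these are model weights")

    # In practice the pinned hash would be recorded at publish time and
    # stored separately from the artifact; here it is computed inline.
    pinned = sha256_of_file(model_path)
    print("unmodified artifact verifies:", verify_artifact(model_path, pinned))

    # Simulate an attacker swapping the file contents.
    model_path.write_bytes(b"tampered weights")
    print("tampered artifact verifies:", verify_artifact(model_path, pinned))
```

A hash check of this kind only detects changes made after the hash was recorded. It does not establish that the original artifact was trustworthy, which is why it is usually combined with provenance tracking and signing.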

Securing MLOps

MLOps exists because the development, deployment and maintenance of ML require unique approaches. ML security similarly requires a unique approach.

As referenced above, one lens for looking at MLOps security is MLSecOps, which – like DevSecOps – is based on the premise that security should be integrated throughout the life cycle. When working to secure an MLOps pipeline that involves sensitive data, here are some of the most important issues to keep in mind:

- Address the entire pipeline

MLOps pipelines are long and complex. An ML pipeline includes many stages: data extraction and preparation, model training and tuning, analysis, validation, deployment, and finally feedback. Privacy and security could be compromised at any point – OWASP’s Machine Learning Security Top Ten list gives a sense of what could go wrong. In the early stages, data poisoning by an attacker can cause the model to behave in undesirable ways. Later, an inference attack or model inversion attack can expose sensitive data, while model theft can reveal IP from the model itself. Prompts and outputs can also be manipulated by bad actors.

- Look at governance and compliance

When companies use personal data with an ML model, that data is subject to government regulations like the EU’s GDPR and California’s CCPA. The recently passed EU AI Act is the first in what will almost certainly be a series of new regulations explicitly addressing AI and ML. Forward-thinking organizations will manage regulatory risk in part by exploring new technologies for securing data and models and fostering transparency and trust.

- Track the supply chain

Like a software supply chain, an ML supply chain is composed of multiple components, including data, code, tools, and processes. ML models often combine open-source, proprietary, and third-party components. A software bill of materials (SBOM) can help companies track these components and spot those that introduce security risk.

- Focus on data

ML models are only as good as their data. And data is one of the most vulnerable aspects of any MLOps pipeline. Organizations need to ensure that data is always in the right location – as it can end up dispersed across clouds, storage containers, and various systems. And it is crucial that data be protected in all three states: at rest, in transit, and in use (during computation). Collaboration on data within and across organizations introduces additional security challenges across the MLOps pipeline that must be addressed – but the results may be worth the effort.

- Adapt for LLMs

When it comes to generative AI, many of the principles and best practices of MLOps apply to Large Language Models (LLMs). Yet some key differences are important to consider in order to effectively secure LLMOps pipelines. For example, due to the computational demands of training and fine-tuning LLMs, specialized hardware such as GPUs is often used for faster data-parallel operations. And while many traditional ML models are trained from scratch, organizations often take pre-trained (foundation) LLMs and fine-tune them with new data. These differences in hardware and use of third-party models introduce additional security and privacy considerations.
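As a rough illustration of the supply chain tracking described above, the sketch below keeps a small inventory of the components behind a model and flags any that lack a pinned version or content hash. It is a simplified stand-in for a real SBOM, not an implementation of a standard format such as SPDX or CycloneDX, and every component name, version, and hash value shown is hypothetical.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class Component:
    """One entry in a simplified, illustrative ML bill of materials."""
    name: str
    kind: str                      # e.g. "dataset", "model", "library"
    origin: str                    # "open-source", "proprietary", or "third-party"
    version: Optional[str] = None
    sha256: Optional[str] = None


def unpinned(components: list[Component]) -> list[str]:
    """Names of components missing a version or a content hash."""
    return [c.name for c in components if not c.version or not c.sha256]


# Hypothetical inventory; names, versions, and hashes are placeholders.
COMPONENTS = [
    Component("base-llm", "model", "third-party", version="1.2.0", sha256="ab12" * 16),
    Component("fraud-training-set", "dataset", "proprietary", version="2024-03"),
    Component("tokenizer-lib", "library", "open-source", sha256="cd34" * 16),
]

# Serialize the inventory so it can be stored alongside the model artifact.
print(json.dumps([asdict(c) for c in COMPONENTS], indent=2))
print("Components needing attention:", unpinned(COMPONENTS))
```

Even a simple inventory like this gives security teams one place to check when a vulnerability is disclosed in an upstream dataset, library, or foundation model.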

Strategic Ecosystems for MLOps

According to a survey on AI from Deloitte, 83% of high-achieving organizations have two or more types of ecosystem partners. While simplicity is sometimes preferable, in this case organizations with diverse ecosystems are more likely to be successfully leveraging AI as a strategic differentiator.

In the area of MLOps, ecosystem partners with innovative solutions can help ensure regulatory compliance and customer trust. For example, confidential computing is a powerful security framework designed to protect sensitive data while in use, within applications, servers, or cloud environments. Confidential computing has the potential to secure the deployment and monitoring of an MLOps pipeline for LLMs.

During inference, confidential computing isolates data in a hardware-based trusted execution environment (TEE). The TEE is a secure enclave where sensitive computations can occur, employing hardware-based mechanisms to prevent unauthorized access and tampering.

To learn about Inpher’s SecurAI confidential computing solution and other privacy-preserving solutions for MLOps, visit our website.