Today, AI and machine learning (ML) algorithms are growing ever more powerful. Their potential applications seem almost unlimited, and they can have a particularly significant impact in industries such as finance, healthcare, and manufacturing. ML algorithms build representations of the world on which to base predictions, yet the complexity that enables these valuable capabilities also makes the results of these AI systems difficult to explain.

This opacity is particularly problematic in high-risk applications where automated decisions can significantly affect people’s lives, such as in the industries mentioned above. As a result, model explainability, also referred to as Explainable AI (XAI), is increasingly becoming an ethical and regulatory imperative. In this blog post, we explore Explainable AI, including relevant regulations and techniques, and explain the importance of privacy-preserving explainability.

What Is Explainable AI?

In a black box system, the inputs and outputs are visible, but the model’s inner logic is so complex that people cannot easily interpret how it arrives at its outputs. This lack of transparency in AI systems is known as the black box problem, which Explainable AI is intended to address.

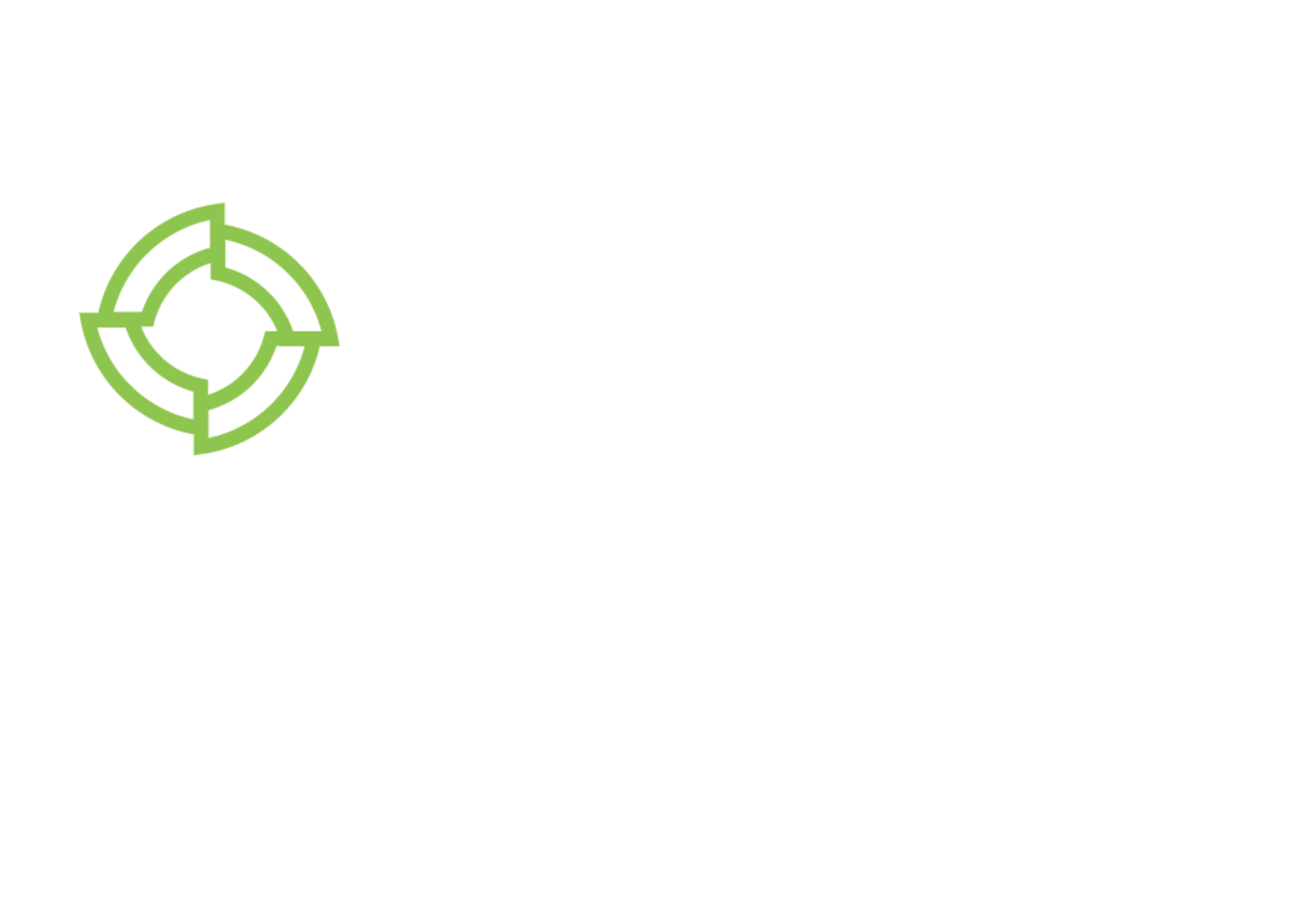

Explainable AI refers to the ability to understand and interpret the decisions made by an ML model. It makes AI more trustworthy by providing insights into how a model generates its predictions, the features it considers important, and the reasoning behind the decisions.

When Is Explainable AI Important?

AI models, and ML models in particular, often involve a tradeoff between accuracy and explainability: the simpler the model (e.g., a regression model), the easier it is to explain, but simpler models are often less accurate than more complex ones. Moreover, computing explainability metrics can be computationally expensive.
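To see why regression models count as easy to explain, consider a minimal sketch, assuming a linear scoring model with hypothetical weights, bias, and feature means (none of these come from a real system). Because the model is linear, each feature's contribution to a prediction is simply its weight times the feature's deviation from the average input, and these contributions add up exactly to the difference between the prediction and the prediction for the average input.

```python
"""Explaining a linear model: each feature contributes w_i * (x_i - mean_i).

The bias, weights, feature names, and feature means are hypothetical,
chosen only to illustrate the idea.
"""

# Hypothetical linear scoring model: score = BIAS + sum(w_i * x_i)
BIAS = 300.0
WEIGHTS = {"income_k": 2.0, "debt_ratio": -150.0, "years_of_history": 10.0}
# Hypothetical average feature values over the training data
MEANS = {"income_k": 60.0, "debt_ratio": 0.4, "years_of_history": 8.0}


def predict(x: dict[str, float]) -> float:
    """Linear model prediction for one input."""
    return BIAS + sum(WEIGHTS[name] * value for name, value in x.items())


def contributions(x: dict[str, float]) -> dict[str, float]:
    """Per-feature contribution relative to the average input."""
    return {name: WEIGHTS[name] * (x[name] - MEANS[name]) for name in WEIGHTS}


if __name__ == "__main__":
    applicant = {"income_k": 45.0, "debt_ratio": 0.6, "years_of_history": 3.0}
    contrib = contributions(applicant)
    # Print the most negative contributions first
    for name, value in sorted(contrib.items(), key=lambda kv: kv[1]):
        print(f"{name:>18}: {value:+.1f}")
    # By linearity, the contributions sum to f(x) - f(average input)
    print(predict(applicant) - predict(MEANS), sum(contrib.values()))
```

For a linear model with independent features, these per-feature contributions coincide with SHAP values. More complex models generally have no such simple closed form, which is part of why their explanations are costlier to compute.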

Explainable AI is most relevant when automated decisions have significant, even life-changing, impacts. Lending is a prime example: the FICO Score is used in more than 90% of lending decisions in the U.S., and regulators require financial institutions to give customers reasons when they take actions such as turning down a loan. A few years ago, FICO ran the Explainable Machine Learning Challenge, which was based on a real financial dataset and focused on evaluating the explanations generated by the participants.

Regulatory Requirements

Increasingly, organizations must address Explainable AI in order to comply with regulations.

In the European Union (EU), the General Data Protection Regulation (GDPR) states in Articles 13-14 and 22 that data controllers should provide information on “the existence of automated decision-making, including profiling” and “meaningful information about the logic involved, as well as the significance and the envisaged consequences of such processing for the data subject.”

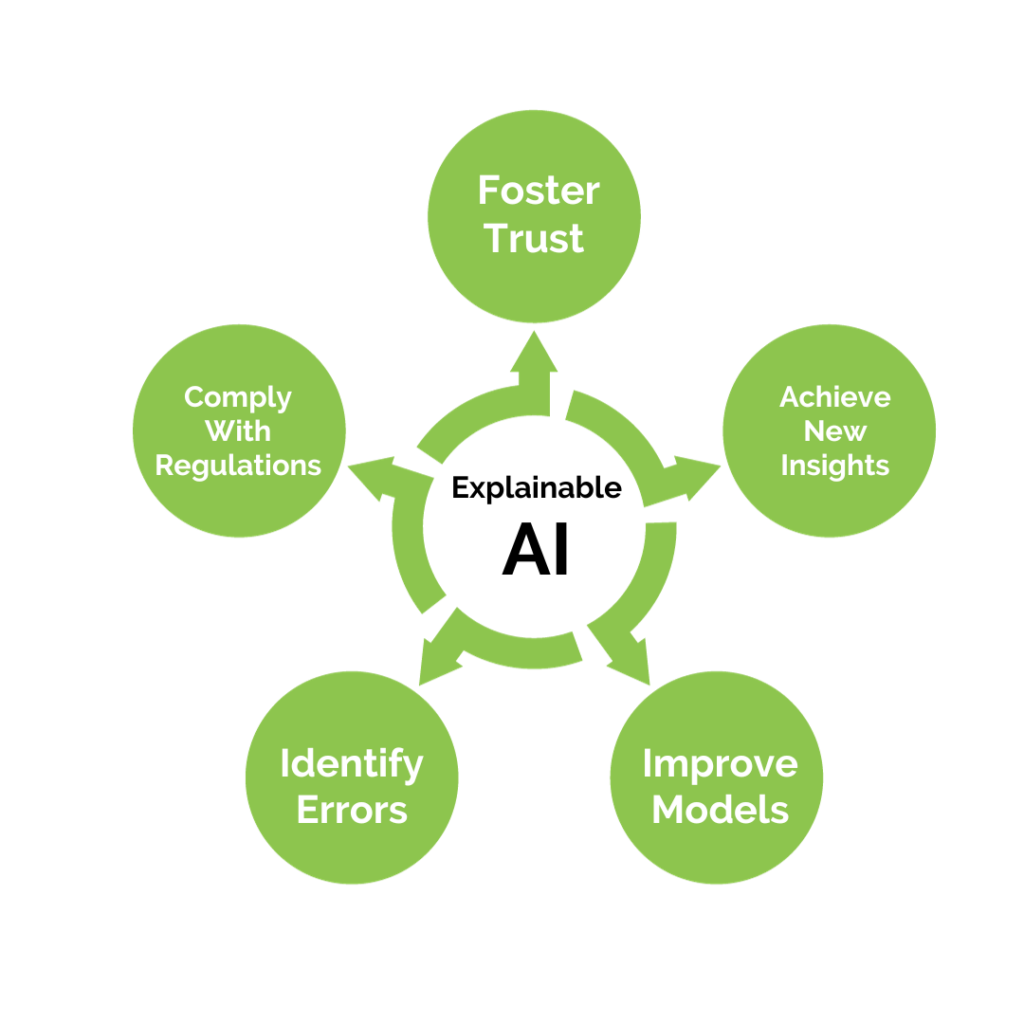

More recently, the EU has proposed the AI Act, a regulatory framework intended to ensure the use of Trustworthy AI, which includes explainability. As a general concept, Trustworthy AI encompasses principles and guidelines that address the following objectives:

- Ethical and responsible AI

- Transparency and explainability

- Fairness and bias mitigation

- Robustness and reliability

- Privacy and data protection

- Accountability and governance

In the U.S., Trustworthy AI is emphasized in President Biden’s recently issued Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence. Even in the absence of regulatory constraints, forward-thinking organizations are considering how Explainable AI fits into their AI strategy.

Techniques and Approaches

There are several approaches to Explainable AI:

- Feature importance – determining the relative importance, or contribution, of each feature to a model’s predictions

- Local explainability – providing explanations for individual predictions, showing which features had the most significant impact on a specific instance

- Rule extraction – deriving interpretable rules or decision trees that mimic complex machine learning models
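Feature importance can be measured in several ways. One simple, model-agnostic option is permutation importance: shuffle one feature's values across the dataset and measure how much the model's error grows. The sketch below is a minimal illustration on a hypothetical toy model and synthetic data, not any particular library's implementation.

```python
import random


def model(row):
    """Hypothetical 'black box': depends strongly on x0, weakly on x1, not on x2."""
    return 3.0 * row[0] + 0.5 * row[1]


def mse(rows, targets):
    """Mean squared error of the model on the given rows."""
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(rows, targets, n_repeats=10, seed=0):
    """Average increase in MSE when each feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = mse(rows, targets)
    importances = []
    for j in range(len(rows[0])):
        increases = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild each row with feature j replaced by the shuffled value
            shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            increases.append(mse(shuffled, targets) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


if __name__ == "__main__":
    rng = random.Random(42)
    rows = [[rng.gauss(0, 1) for _ in range(3)] for _ in range(500)]
    targets = [model(r) for r in rows]  # noiseless synthetic targets
    for j, imp in enumerate(permutation_importance(rows, targets)):
        print(f"x{j}: {imp:.3f}")
```

Because the toy model ignores `x2`, shuffling that column leaves the error unchanged and its importance is exactly zero, while `x0`, which has the largest weight, scores highest. This is a global measure over the whole dataset; local explainability instead attributes a single prediction.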

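In particular, techniques such as LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) generate explanations at the instance level by estimating how much each individual feature contributed to a given prediction. LIME fits a simple, interpretable surrogate model around the instance being explained, while SHAP attributes the prediction to features using Shapley values from cooperative game theory.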
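To make the Shapley-value idea behind SHAP concrete, here is a minimal sketch that computes exact Shapley values for a single prediction by enumerating every coalition of features. It assumes a simple baseline convention (features outside a coalition are set to hypothetical reference values) and a hypothetical toy model; it is not the SHAP library's algorithm, which relies on faster approximations or model-specific methods.

```python
from itertools import combinations
from math import factorial


def model(x):
    """Hypothetical toy model with an interaction between x0 and x1."""
    return 2.0 * x[0] + 1.0 * x[1] + 3.0 * x[0] * x[1] - 0.5 * x[2]


def value(coalition, x, baseline):
    """Model output when only features in `coalition` take their actual values;
    all other features are set to the (hypothetical) baseline values."""
    z = [x[i] if i in coalition else baseline[i] for i in range(len(x))]
    return model(z)


def shapley_values(x, baseline):
    """Exact Shapley values by enumerating all subsets of the other features."""
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                marginal = value(s | {i}, x, baseline) - value(s, x, baseline)
                phi[i] += weight * marginal
    return phi


if __name__ == "__main__":
    x = [1.0, 2.0, 4.0]
    baseline = [0.0, 0.0, 0.0]
    phi = shapley_values(x, baseline)
    print(phi)
    # Contributions sum to f(x) minus f(baseline)
    print(sum(phi), model(x) - model(baseline))
```

For `x = [1, 2, 4]` and an all-zero baseline, this yields approximately `[5.0, 5.0, -2.0]`: the interaction term is split evenly between `x0` and `x1`, and the values sum to f(x) minus f(baseline). The loop visits every subset of the other features, so the cost grows exponentially with the number of features, which is one reason exact explainability metrics can be expensive.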

In the context of the EU AI Act, techniques such as SHAP can help fulfill the requirement to provide meaningful explanations for AI decisions. By examining SHAP values, stakeholders can gain insights into how specific features influence the model’s predictions and make informed judgments about the fairness, bias, or potential risks associated with the AI system.

Privacy-Preserving Explainability

While explainability metrics are usually computed on public input data and models, explainability can be just as important, if not more so, for private input data. Industries such as finance, healthcare, and manufacturing are increasingly looking to leverage sensitive data to gain insights and support decision-making, and machine learning is often the best way to do so. In a growing number of cases, data scientists are using privacy-enhancing technologies (PETs) to securely compute on distributed data. Explainability can help make ML a viable option in high-impact applications with private data.

At Inpher, we have developed XorSHAP, a general-purpose privacy-preserving algorithm for computing Shapley values for decision tree models in the Secure Multiparty Computation (MPC) setting. XorSHAP scales to real-world datasets and is parallelization-friendly, which enables future work on hardware acceleration with GPUs. By supporting Explainable AI, XorSHAP is an integral part of the XOR Platform, which delivers enterprise-ready data science capabilities to develop, test, integrate, and deploy cryptographically secure workflows.
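XorSHAP's own protocol is not reproduced here. To give a feel for what computing "in the MPC setting" means, here is a minimal sketch of additive secret sharing, a basic building block used in many MPC protocols; treat it as an illustrative assumption, not a description of XorSHAP's internals. Each private value is split into random shares that individually reveal nothing, each party adds the shares it holds locally, and only the final total is reconstructed. The 2^64 modulus and the single-process simulation of the parties are arbitrary choices for this sketch, which omits networking, multiplication, and everything else a real protocol needs.

```python
import secrets

MODULUS = 2**64  # arbitrary ring size chosen for this sketch


def share(secret: int, n_parties: int) -> list[int]:
    """Split `secret` into n random additive shares that sum to it mod MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    last = (secret - sum(shares)) % MODULUS
    return shares + [last]


def reconstruct(shares: list[int]) -> int:
    """Recombine shares; any subset short of all n looks uniformly random."""
    return sum(shares) % MODULUS


def secure_sum(private_inputs: list[int]) -> int:
    """Simulates n parties: each input owner secret-shares its value, party k
    receives only the k-th share of every input, adds them locally, and the
    partial sums are combined to reveal only the total."""
    n = len(private_inputs)
    all_shares = [share(v, n) for v in private_inputs]  # one row per input owner
    # Party k locally adds the k-th share of every input
    local_sums = [sum(row[k] for row in all_shares) % MODULUS for k in range(n)]
    return reconstruct(local_sums)


if __name__ == "__main__":
    balances = [1200, 560, 3100]  # hypothetical private values held by 3 parties
    print(secure_sum(balances))  # 4860
```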

Conclusion

Industries from finance to healthcare to manufacturing can benefit from powerful AI systems that analyze large datasets to generate predictions. Yet in high-risk applications, the black box nature of many ML models presents a problem. Explainable AI can help organizations meet ethical and regulatory imperatives so that they can unlock the potential of their data, including private data.