Part 1: What is ethical AI?

How many of you have Googled ‘What is ethical AI?’ According to Wikipedia, ethical AI, or as Wikipedia categorizes it, the ethics of artificial intelligence, is the branch of the ethics of technology specific to artificial intelligence systems. It covers a broad range of topics within the field that are considered to have particular ethical stakes.

Understanding ethical AI is not the same as committing to institutionalize a corporate operational standard for how you will use AI to promote more ethical behavior with information found in the wild, or in this case, data. Large volumes of critical, meaningful data can help any company act responsibly and, more importantly, ethically.

In this blog series, we will look at what we know about ethical AI, how the technology market is responding to it, whether we are doing enough, and whether we can revolutionize how we incorporate ethical AI standards by leveraging innovative approaches in tech.

What we know about Ethical AI

Ethical AI refers to the development and deployment of artificial intelligence systems that prioritize ethical considerations, such as fairness, transparency, accountability, privacy, and safety. Here are some key aspects and considerations regarding ethical AI:

- Fairness and Bias Mitigation: One of the central concerns in AI ethics is ensuring fairness in algorithmic decision-making. AI systems should be designed to mitigate biases and ensure equitable treatment across different demographics.

- Transparency and Explainability: AI systems should be transparent, and their decisions should be explainable to users and stakeholders. This helps build trust and understanding of AI systems’ behavior and enables users to challenge decisions when needed.

- Accountability and Responsibility: There should be clear accountability for the outcomes of AI systems, with identifiable individuals or organizations responsible for their development, deployment, and maintenance.

- Privacy and Data Protection: AI systems often rely on large amounts of data, raising concerns about privacy and data protection. Ethical AI frameworks prioritize the protection of individuals’ privacy rights and ensure that data is collected and used responsibly.

- Safety and Robustness: AI systems should be designed to operate safely and reliably, minimizing the risk of harm to users and society. This includes considerations of physical safety in robotics applications as well as ensuring robustness against adversarial attacks and unintended consequences.

- Human-Centered Design: Ethical AI involves designing systems with a focus on human values and well-being. This includes considering the societal impacts of AI deployment and actively involving stakeholders, including affected communities, in the design process.

- Regulation and Governance: There is growing recognition of the need for regulatory frameworks and governance mechanisms to ensure the ethical development and deployment of AI. These may include laws, standards, guidelines, and industry best practices.

- Global Collaboration and Awareness: Given the global nature of AI development and deployment, international collaboration and awareness are crucial for addressing ethical challenges effectively. This includes sharing best practices, coordinating regulatory efforts, and fostering dialogue among stakeholders from different sectors and regions.

- Continuous Monitoring and Evaluation: Ethical AI requires ongoing monitoring and evaluation of AI systems’ impacts and performance to identify and address ethical concerns as they arise. This includes mechanisms for auditing algorithms and assessing their societal implications.

- Ethical Decision-Making Frameworks: Organizations developing AI systems should adopt ethical decision-making frameworks to guide their development processes and ensure that ethical considerations are integrated into all stages of AI system development.
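Of the aspects above, fairness and continuous monitoring are the most directly measurable. As one illustration, a common audit check is demographic parity: comparing the rate of positive decisions a system makes for each group. The sketch below is a minimal, hypothetical Python example. The function names, the sample loan-approval data, and the choice of demographic parity as the metric are our own assumptions, and demographic parity is only one of several competing definitions of fairness.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of positive decisions (1) for each group label."""
    if len(decisions) != len(groups):
        raise ValueError("decisions and groups must be the same length")
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(decisions, groups):
    """Largest difference in selection rate between any two groups (0.0 means parity)."""
    rates = selection_rates(decisions, groups)
    if not rates:
        raise ValueError("no decisions supplied")
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Hypothetical loan-approval decisions (1 = approved) for two illustrative groups.
    decisions = [1, 0, 1, 1, 0, 1, 0, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    print(selection_rates(decisions, groups))
    print(demographic_parity_gap(decisions, groups))
```

In this made-up data, group A is approved 75% of the time and group B 25% of the time, a gap of 0.5. A gap on its own does not prove unfair treatment, but a large one is the kind of signal that ongoing monitoring and auditing are meant to surface for human review.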

Overall, ensuring the ethical development and deployment of AI requires a multidisciplinary approach involving collaboration among researchers, policymakers, industry stakeholders, and civil society to address complex technical, social, and ethical challenges.

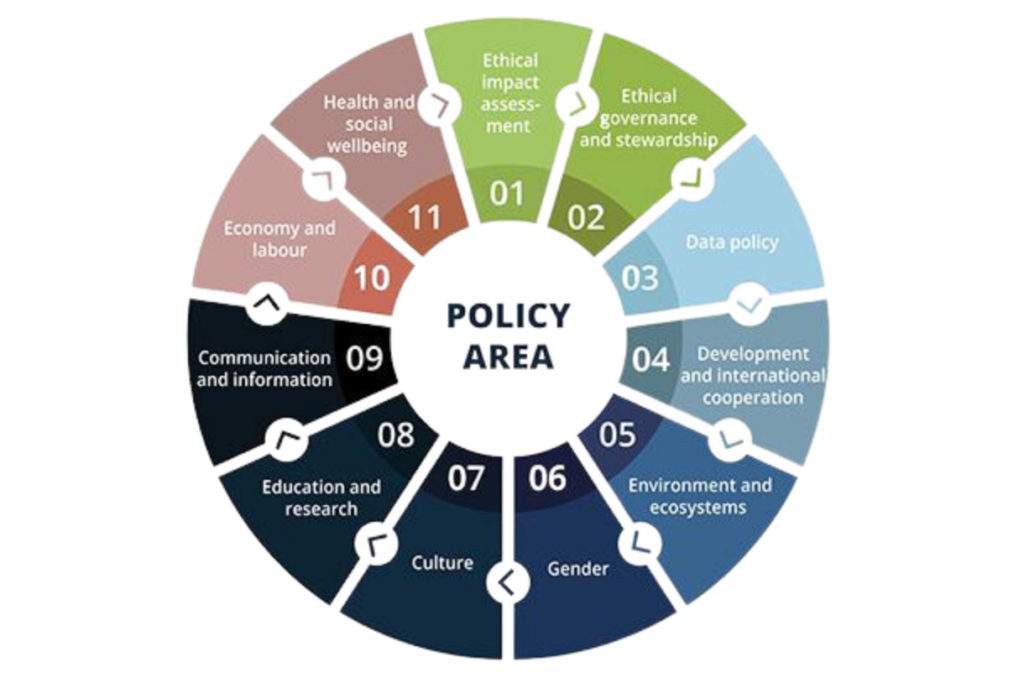

The United Nations Educational, Scientific and Cultural Organization, more commonly referred to as UNESCO, positions itself as an organization that contributes to peace and security by promoting international cooperation in education, sciences, culture, communication, and information. As part of its effort to promote knowledge sharing and the free flow of ideas, and so accelerate mutual understanding and a more perfect knowledge of each other’s lives, UNESCO held its 2nd Global Forum on the Ethics of AI this past February, focused specifically on changing the landscape of AI governance. The forum builds on UNESCO’s initial report, the Recommendation on the Ethics of Artificial Intelligence, released in 2021, which sets out recommended policy areas for ethical AI.

UNESCO recommended policy areas for Ethical AI

Feel free to peruse this extensive report yourself, but for me, its persistent theme and key takeaways point to a new world in which we want to leverage AI, alongside a regulatory push to embrace the technology while keeping it ethical.

Part two of our series delves into data utility in ethical AI and the contributions from the tech industry.